Security misconfiguration is a worrying problem: it ranks fifth (A05) in the 2021 OWASP Top 10. We frequently encounter vulnerabilities of this type during our web application penetration tests. Moreover, OWASP reports that 90% of the applications in its dataset were tested for some form of misconfiguration.

In this article, we present this type of vulnerability through the prism of the OWASP Top 10, using attack scenarios. We also detail the best practices and measures to be implemented to protect against them.

What are the causes of security misconfigurations?

Security misconfiguration vulnerabilities are often the result of a lack of clarity in the documentation that comes with the tools and platforms used to develop web applications.

Sometimes developers misinterpret security parameters or do not fully understand the implications of their configuration choices.

Furthermore, some products have vulnerable default settings. As a result, users, through ignorance or negligence, may not change these default settings, leaving gaping security holes in their applications.

To better understand the impact of security misconfigurations, let's look at two concrete examples. They illustrate how configuration errors can compromise the security of web applications and put user data at risk.

Exploiting security misconfigurations on web applications

Spring Boot Actuator

During a black box penetration test, we identified that the target web application used the ‘Spring Boot’ framework. This conclusion was reached by inspecting the company’s GitHub account, where we noticed that several projects were based on this framework.

We therefore assumed that the developers had probably enabled Spring Boot Actuator, a sub-project of the Spring Boot framework. This module makes it possible to monitor and manage an application by exposing specific HTTP endpoints.

However, these exposed endpoints can quickly become critical, as they can be used to read log files, trigger memory dumps, and so on. Furthermore, in versions prior to 1.5, the sensitive endpoints of this module are accessible without authentication by default. And although version 1.5 and later secure these sensitive endpoints by default, developers sometimes disable this protection.

In our pentest, we observed that most interactions with the application went through an API. This made the API a key target: if the developers monitor it, Actuator endpoints are likely to be exposed on it.

Brute force of API endpoints and recovery of user cookies

Our aim was to brute force every API endpoint with a wordlist of Spring Boot Actuator endpoint paths.

To do this, we first compiled an exhaustive list of the API endpoints by extracting them directly from the application's JavaScript files.
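As a rough illustration of this extraction step, the Python sketch below pulls quoted paths out of a JavaScript bundle with a regular expression. It assumes, purely for the example, that the API routes start with `/api/`; the prefix, the pattern and the function name `extract_api_paths` are hypothetical and would need adapting to each application. Routes built dynamically at runtime will not be caught by a regex.

```python
import re

# Hypothetical pattern: quoted strings ("...", '...' or `...`) starting with "/api/".
# The real route prefix depends on the target application.
API_PATH_RE = re.compile(r"""["'`](/api/[A-Za-z0-9_\-/.{}]*)["'`]""")


def extract_api_paths(js_source: str) -> list[str]:
    """Return the unique API paths found in a JavaScript bundle, in first-seen order."""
    # dict.fromkeys removes duplicates while preserving insertion order
    return list(dict.fromkeys(
        match.group(1).rstrip("/") for match in API_PATH_RE.finditer(js_source)
    ))


if __name__ == "__main__":
    bundle = 'fetch("/api/users/me");axios.get(`/api/orders`);const x="/api/users/me";'
    print(extract_api_paths(bundle))  # ['/api/users/me', '/api/orders']
```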

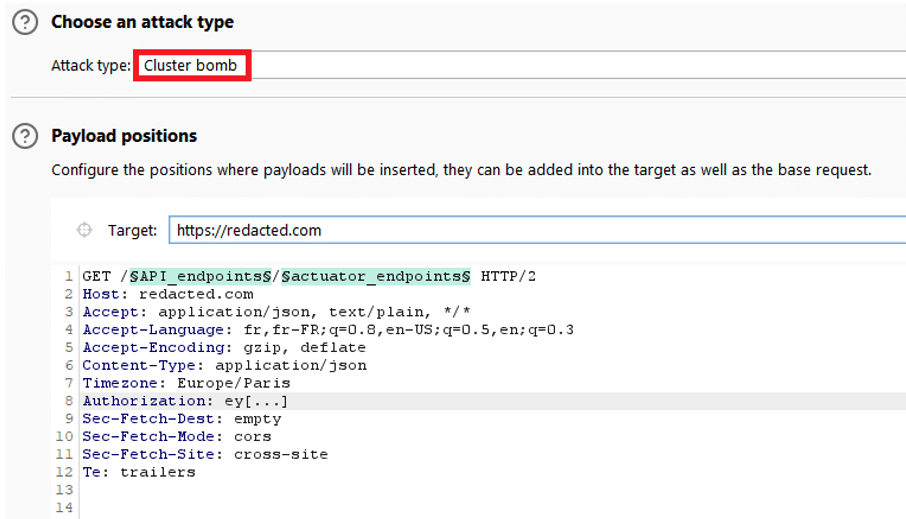

We then brute-forced each of these endpoints with the Spring Boot Actuator wordlist.

There are several ways to perform this kind of brute force attack. Here, we used the Cluster Bomb attack type of Burp Suite's Intruder tool.
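In Burp Intruder, a Cluster bomb attack iterates through every combination of the payload sets, one set per insertion position. With two positions (the API path and the Actuator path), this amounts to a Cartesian product, which the following Python sketch reproduces. The short `ACTUATOR_PATHS` wordlist and the helper name `cluster_bomb` are illustrative assumptions, not the list we used during the test.

```python
from itertools import product

# Illustrative wordlist only: Spring Boot 2.x and later serve Actuator endpoints
# under /actuator, whereas Spring Boot 1.x exposes them at the root.
ACTUATOR_PATHS = ["actuator", "actuator/env", "actuator/heapdump", "env", "heapdump", "trace"]


def cluster_bomb(api_paths, actuator_paths):
    """Yield every (API path x Actuator path) combination, like a two-position cluster bomb."""
    for api_path, actuator_path in product(api_paths, actuator_paths):
        yield f"{api_path.rstrip('/')}/{actuator_path}"


if __name__ == "__main__":
    for path in cluster_bomb(["/api/users", "/api/orders"], ["env", "heapdump"]):
        print(path)
```

Each generated path would then be requested against the target host and the responses compared (status code, length), which is what Intruder automates.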

Several exploitation paths are possible, depending on which endpoints are exposed. At the end of our attack, we found the "/heapdump" endpoint accessible on one of the API endpoints.

This endpoint returns a heap dump, i.e. a snapshot of the JVM's heap memory at a given moment T.

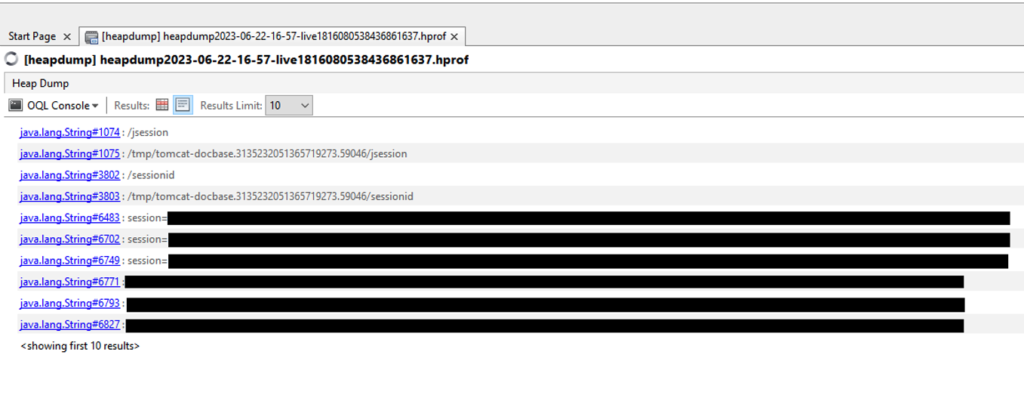

After downloading the heap dump, which comes as a gzip-compressed HPROF file (hprof.gz), we can analyse it with VisualVM, a graphical tool that can open and browse heap dumps.

As the file is very large, we focused on keywords likely to reveal the most critical information.
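The following Python sketch shows a crude alternative to browsing the dump in VisualVM: decompress it and search the raw bytes for keywords, keeping the printable text around each hit. The keyword list (`JSESSIONID`, `Cookie:`, `Authorization:`) is an assumption for illustration. This byte search only finds strings stored as single-byte arrays, which is usual for ASCII text since Java 9's compact strings; older JVMs store strings as UTF-16 char arrays, which it would miss.

```python
import gzip
import re

# Illustrative keywords only: adapt them to the cookie and header names used by the target.
DEFAULT_KEYWORDS = (b"JSESSIONID", b"Cookie:", b"Authorization:")


def search_heapdump(path, keywords=DEFAULT_KEYWORDS, context=80):
    """Return the printable text around each keyword found in an HPROF dump (.hprof or .hprof.gz)."""
    opener = gzip.open if path.endswith(".gz") else open
    with opener(path, "rb") as handle:
        # Reads the whole dump into memory: acceptable for a sketch, costly for multi-GB dumps.
        data = handle.read()
    snippets = []
    for keyword in keywords:
        for match in re.finditer(re.escape(keyword), data):
            start = max(match.start() - context, 0)
            raw = data[start:match.end() + context]
            # Replace non-printable bytes so binary heap structures do not clutter the output.
            snippets.append(re.sub(rb"[^\x20-\x7e]", b".", raw).decode("ascii"))
    return snippets


if __name__ == "__main__":
    for snippet in search_heapdump("heapdump.hprof.gz"):
        print(snippet)
```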

In our case, we managed to collect session cookies from all users.

How to prevent this type of exploitation?

The official Spring documentation recommends protecting sensitive endpoints with Spring Security, which adds authentication and access control to the various endpoints.
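Spring Security is the recommended fix, but reviewing the application's configuration can also quickly reveal risky Actuator settings. The Python sketch below scans a `.properties` file for two of them: `management.endpoints.web.exposure.include` listing sensitive endpoints (Spring Boot 2.x and later) and `management.security.enabled=false` (Spring Boot 1.5). The function name and the contents of the `SENSITIVE` set are our own choices; YAML configuration and environment-variable overrides are not handled.

```python
import re

# key = value or key: value, ignoring comment lines that start with # or !
PROPERTY_RE = re.compile(r"^\s*([^#!=:\s]+)\s*[=:]\s*(.*?)\s*$")
# Our own selection of endpoints we consider sensitive ("*" exposes everything).
SENSITIVE = {"*", "env", "heapdump", "threaddump", "logfile", "configprops", "mappings"}


def risky_actuator_settings(properties_text: str) -> list[str]:
    """Flag Actuator settings that expose sensitive endpoints or disable their protection."""
    findings = []
    for line in properties_text.splitlines():
        match = PROPERTY_RE.match(line)
        if not match:
            continue
        key, value = match.groups()
        if key == "management.endpoints.web.exposure.include":
            exposed = {item.strip() for item in value.split(",")} & SENSITIVE
            if exposed:
                findings.append(f"{key} exposes {', '.join(sorted(exposed))}")
        elif key == "management.security.enabled" and value.lower() == "false":
            findings.append(f"{key}=false disables actuator security (Spring Boot 1.5)")
    return findings
```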

S3 bucket misconfiguration

Amazon S3 (Simple Storage Service) is an online storage service that offers the possibility of storing various types of data in the cloud.

In Amazon S3 terminology, data is organised and stored as objects inside containers called buckets. Each object is uniquely identified within its bucket by an "object key".

With regard to the security of the data stored in these buckets, Amazon S3 offers several mechanisms for managing authorisations and access controls, which can vary depending on the context and the specific needs of the application.

- IAM policies (Identity and Access Management policies) define which AWS actions users, groups and roles can perform, and on which resources.

- S3 bucket policies are specific to buckets and allow you to add access control rules at the level of the bucket itself. These policies define who can access the bucket and what actions they can perform.

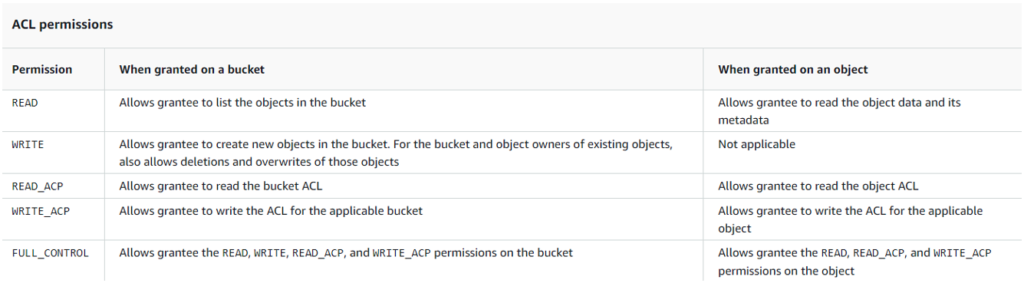

- S3 ACLs (access control lists) can be attached to the bucket and to individual objects within it. They add a further level of granularity: different authorisations can be specified for each object, in addition to the access controls defined at bucket level.
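To make the bucket policy mechanism more concrete, the Python sketch below parses a policy document and returns the `Allow` statements granted to every principal (`"*"`), the typical sign of a public bucket. The helper name `public_statements` is hypothetical, and the check is deliberately simplified: it ignores `Condition` blocks, which can restrict an otherwise public statement.

```python
import json


def public_statements(policy_json: str) -> list[dict]:
    """Return the Allow statements of a bucket policy whose principal is everyone ("*")."""
    policy = json.loads(policy_json)
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be written as an object
        statements = [statements]
    public = []
    for statement in statements:
        principal = statement.get("Principal")
        if isinstance(principal, dict):  # e.g. {"AWS": "*"} or {"AWS": ["*"]}
            principal = principal.get("AWS")
        principals = principal if isinstance(principal, list) else [principal]
        # Simplification: Condition blocks that could narrow the grant are not evaluated.
        if statement.get("Effect") == "Allow" and "*" in principals:
            public.append(statement)
    return public
```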

Exploiting a broken access control vulnerability

During a grey box pentest, while inspecting the HTML source code of the application, we identified that an S3 Bucket was being used to store the resources associated with our account.

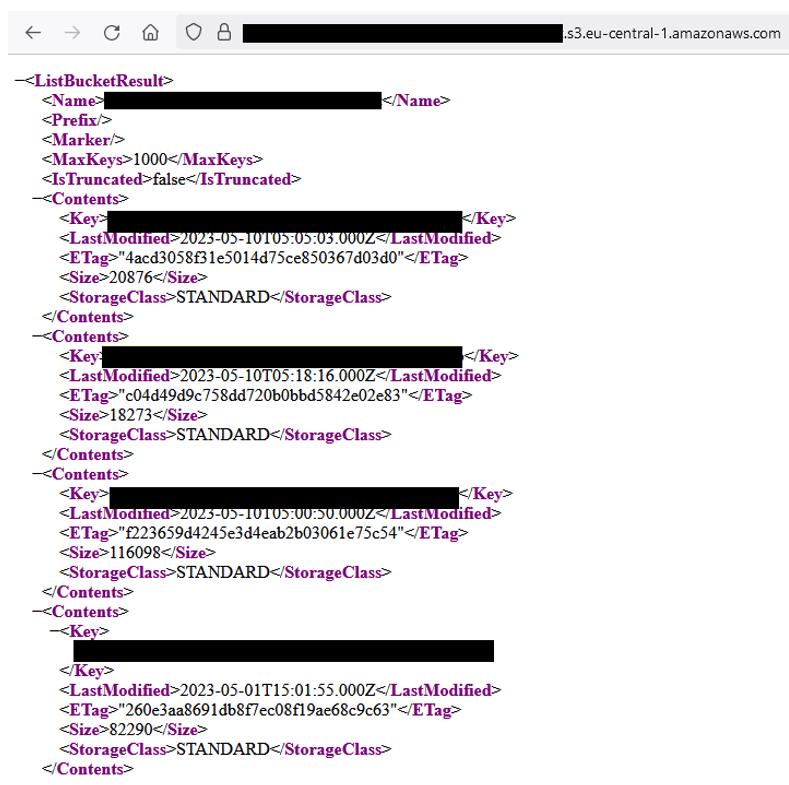

Generally, the URL of an S3 bucket follows the standard format "https://bucket_name.s3.region.amazonaws.com/" (older URLs may use the "https://bucket_name.s3-region.amazonaws.com/" form), where "bucket_name" is the unique name of the bucket and "region" indicates the geographical region in which the bucket is located.
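The Python sketch below extracts the bucket name and region from such a URL. It assumes virtual-hosted-style hosts in three forms: `bucket_name.s3.region.amazonaws.com`, `bucket_name.s3-region.amazonaws.com` and the region-less `bucket_name.s3.amazonaws.com`. Path-style URLs and other endpoint variants (dual-stack, static website hosting) are deliberately left out and return `None`. The function name `parse_s3_url` is our own.

```python
import re
from urllib.parse import urlparse

# bucket.s3.region.amazonaws.com, bucket.s3-region.amazonaws.com or bucket.s3.amazonaws.com;
# regions look like eu-west-3, us-east-1 or us-gov-west-1.
S3_HOST_RE = re.compile(
    r"^(?P<bucket>[a-z0-9][a-z0-9.\-]*[a-z0-9])\.s3"
    r"(?:[.\-](?P<region>[a-z]{2}(?:-[a-z]+)+-\d+))?\.amazonaws\.com$"
)


def parse_s3_url(url: str):
    """Return (bucket, region) for a virtual-hosted S3 URL, region None if absent; else None."""
    host = (urlparse(url).hostname or "").lower()
    match = S3_HOST_RE.match(host)
    if not match:
        return None
    return match.group("bucket"), match.group("region")
```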

Whenever we come across an S3 bucket, we systematically test its access rights to see whether they are too permissive. Amazon's documentation is fairly clear and lists all the permissions that can be granted.

To test these permissions, we use the AWS command line interface (CLI). Authenticated tests require AWS access keys in the CLI configuration files.

Here, however, we make all our requests anonymously with the "--no-sign-request" option, which tells the CLI not to sign requests or load any credentials.

READ access on the bucket allows you to list all the objects it contains:

    aws s3 ls s3://bucket_name --no-sign-request

WRITE access allows you to create, delete and modify objects in the bucket:

    aws s3 cp file.txt s3://bucket_name/file.txt --no-sign-request

The READ_ACP permission allows you to read the bucket's ACL:

    aws s3api get-bucket-acl --bucket bucket_name --no-sign-request

Similarly, the WRITE_ACP permission allows you to overwrite the bucket's ACL:

    aws s3api put-bucket-acl --bucket bucket_name --access-control-policy file://acl.json --no-sign-request

In the case of our pentest, anonymous read access was enabled, which allowed us to list all the object keys. Since the objects themselves could also be downloaded without a pre-signed URL, we had access to every file stored in the bucket.
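When `get-bucket-acl` succeeds, its JSON output lists each grant with a grantee and a permission. The Python sketch below flags grants made to the two predefined groups that make a bucket public: AllUsers (anyone, including anonymous requests) and AuthenticatedUsers (any authenticated AWS account). It assumes the CLI's JSON output format (`--output json`); the function name `public_acl_grants` is hypothetical.

```python
import json

# URIs of the two predefined S3 groups that make a grant public.
PUBLIC_GROUPS = {
    "http://acs.amazonaws.com/groups/global/AllUsers": "everyone (anonymous)",
    "http://acs.amazonaws.com/groups/global/AuthenticatedUsers": "any AWS account",
}


def public_acl_grants(acl_json: str) -> list[tuple[str, str]]:
    """Return (audience, permission) pairs for grants made to the public S3 groups."""
    acl = json.loads(acl_json)
    findings = []
    for grant in acl.get("Grants", []):
        # Canonical-user grantees have no URI and are therefore ignored here.
        audience = PUBLIC_GROUPS.get(grant.get("Grantee", {}).get("URI", ""))
        if audience:
            findings.append((audience, grant.get("Permission", "")))
    return findings
```

For example, the output of `aws s3api get-bucket-acl --bucket bucket_name --output json --no-sign-request` could be saved to a file and passed to this function; any `READ`, `WRITE`, `READ_ACP`, `WRITE_ACP` or `FULL_CONTROL` grant it returns deserves attention.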

The impact of this misconfiguration depends on the data stored in the bucket. In our case, the bucket held sensitive user data.

How to fix access controls?

To fix these access control problems, the bucket's permissions must be restricted so that external users can no longer access its resources.

This involves:

- Removing anonymous read and write access to the bucket

- Removing anonymous read and write access to its ACLs (READ_ACP / WRITE_ACP permissions)

- Using pre-signed URLs to give users temporary access to individual objects
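A pre-signed URL embeds a time-limited signature computed with the credentials of someone authorised to read the object, so the bucket can stay private while a specific object is shared temporarily. In practice it is generated with an AWS SDK (for example boto3's `generate_presigned_url`); the standard-library Python sketch below only illustrates the underlying Signature Version 4 query-string scheme for a GET request. The bucket, key, region and credentials are placeholders, and the function name `presign_get_url` is ours.

```python
import datetime
import hashlib
import hmac
from urllib.parse import quote


def _hmac_sha256(key: bytes, message: str) -> bytes:
    return hmac.new(key, message.encode("utf-8"), hashlib.sha256).digest()


def presign_get_url(bucket, key, region, access_key, secret_key, expires=3600, now=None):
    """Build a SigV4 pre-signed GET URL for bucket/key (virtual-hosted style)."""
    if not 1 <= expires <= 604800:  # SigV4 pre-signed URLs are valid for at most 7 days
        raise ValueError("expires must be between 1 and 604800 seconds")
    now = now or datetime.datetime.now(datetime.timezone.utc)
    amz_date = now.strftime("%Y%m%dT%H%M%SZ")
    date_stamp = now.strftime("%Y%m%d")
    host = f"{bucket}.s3.{region}.amazonaws.com"
    scope = f"{date_stamp}/{region}/s3/aws4_request"
    canonical_uri = "/" + quote(key, safe="/~")

    # Query parameters are sorted by name and percent-encoded (including "/").
    params = {
        "X-Amz-Algorithm": "AWS4-HMAC-SHA256",
        "X-Amz-Credential": f"{access_key}/{scope}",
        "X-Amz-Date": amz_date,
        "X-Amz-Expires": str(expires),
        "X-Amz-SignedHeaders": "host",
    }
    canonical_query = "&".join(
        f"{quote(name, safe='~')}={quote(value, safe='~')}"
        for name, value in sorted(params.items())
    )

    # Canonical request: only the Host header is signed and the payload is not hashed.
    canonical_request = "\n".join(
        ["GET", canonical_uri, canonical_query, f"host:{host}\n", "host", "UNSIGNED-PAYLOAD"]
    )
    string_to_sign = "\n".join(
        ["AWS4-HMAC-SHA256", amz_date, scope,
         hashlib.sha256(canonical_request.encode("utf-8")).hexdigest()]
    )

    # Derive the signing key from the secret key, date, region and service.
    signing_key = _hmac_sha256(("AWS4" + secret_key).encode("utf-8"), date_stamp)
    for part in (region, "s3", "aws4_request"):
        signing_key = _hmac_sha256(signing_key, part)
    signature = hmac.new(signing_key, string_to_sign.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"https://{host}{canonical_uri}?{canonical_query}&X-Amz-Signature={signature}"
```

Anyone holding the resulting URL can download that one object until it expires, without the bucket itself granting any anonymous permission.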

Author: Yacine DJABER – Pentester @Vaadata